Designing a Clinical AI Agent With Traceable Actions and Enterprise-Grade RBAC

Summary:

The rise of clinical AI agents marks a pivotal moment in healthcare’s digital transformation. Beyond simple automation, these AI-powered clinical assistants are becoming context-aware collaborators, able to read medical documentation, reason over complex data, and assist clinicians in real-time decision-making.

But as these systems gain autonomy, new challenges emerge:

Can we trust their actions?

Can we verify what they did, why, and who authorized it?

In an era governed by HIPAA, GDPR, and FDA guidance for Software as a Medical Device (SaMD), traceability and role-based access control (RBAC) are not optional; they are fundamental.

A traceable clinical AI agent ensures that every recommendation, data access, and inference is auditable, explainable, and compliant.

At the same time, enterprise-grade RBAC acts as a digital immune system, preventing unauthorized data exposure and enforcing the principle of least privilege.

Together, these two pillars, traceability and RBAC, transform clinical AI from a black box into a transparent, accountable, and ethical digital colleague.

This article explores how to architect, implement, and scale a traceable healthcare AI agent ready for real-world deployment, blending insights from clinical informatics, cybersecurity, and enterprise engineering.

Key Takeaways

- Traceability is trust, and every AI action should leave a clear, auditable footprint.

- RBAC is your digital shield: define and enforce roles early to prevent privilege creep.

- Explainability equals adoption because clinicians trust systems that justify their reasoning.

- Compliance isn’t a checkbox; it’s architecture. HIPAA and ISO controls must be built in, not bolted on.

- EHR integration drives value by embedding AI within the existing clinical workflow.

- Human-in-the-loop oversight remains non-negotiable, and AI should augment, not replace, clinical judgment.

- Traceable, secure AI agents improve efficiency and patient trust.

- Privacy, fairness, and interpretability are key to sustainable innovation.

The Evolution of the Clinical AI Agent

Early medical AI systems were rule-based decision support tools, rigidly programmed to suggest diagnoses or flag anomalies.

Then came machine learning models that recognize patterns in data, followed by generative clinical agents that can summarize notes, draft discharge summaries, and reason over electronic health records (EHRs).

A modern clinical AI agent is more than an algorithm; it’s an agentic AI system capable of perception, reasoning, and controlled action within defined boundaries.

It interprets clinical workflows, interacts with EHR data, collaborates with care teams, and justifies its actions through explainable AI (XAI) frameworks.

To build such a system responsibly, two foundations must be established from day one:

- Traceability: the ability to reconstruct every action, decision, and access point.

- RBAC: ensuring only authorized users (clinicians, nurses, admins) can access specific functions or patient data.

When these are properly implemented, you get a traceable, explainable, and compliant clinical decision agent that can safely operate in regulated healthcare environments.

| 86% of healthcare organizations say they are already using AI extensively, and the global healthcare AI market is projected to exceed $120 billion by 2028. |

Traceability: The Audit Trail of Trust

Traceability in clinical AI systems means every model inference, prompt, and user interaction is logged, versioned, and attributed.

The Four Pillars of Traceability

- Input Logging: Every user prompt, context, or dataset fed into the agent is timestamped and stored securely.

- Output Attribution: The model version, training data source, and confidence level for each response are recorded.

- Data Provenance: Tracks the lineage of medical data—where it originated, how it was transformed, and who viewed it.

- Explainability Hooks: Links outputs to clinical evidence, literature, or structured data.

Note: Together, these pillars allow auditors or clinicians to reconstruct any decision: what was recommended, by whom, when, and why.
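To make the four pillars concrete, here is a minimal sketch of a single audit record in Python. The field names, the example model and dataset version strings, and the FHIR-style reference `Observation/123` are hypothetical; a real schema would follow your organization's logging and retention policy.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    """One agent interaction, with a field group for each traceability pillar."""

    # Input logging: who asked, and what they asked
    user_id: str
    prompt: str
    # Output attribution: which model (and training data) produced what
    model_version: str
    training_data_version: str
    output: str
    confidence: float
    # Data provenance: identifiers of the source records the agent read
    source_record_ids: tuple[str, ...]
    # Explainability hooks: pointers to the evidence behind the output
    evidence_links: tuple[str, ...]
    # UTC timestamp captured when the record is created
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # Sorted keys give a stable serialization for storage or hashing
        return json.dumps(asdict(self), sort_keys=True)


record = AuditRecord(
    user_id="dr-0042",
    prompt="Summarize today's lab results",
    model_version="summarizer-2024-06-01",
    training_data_version="notes-corpus-v3",
    output="Potassium mildly elevated at 5.3 mmol/L.",
    confidence=0.82,
    source_record_ids=("Observation/123",),
    evidence_links=("Observation/123",),
)
print(record.to_json())
```

Making the record frozen means a logged interaction cannot be modified in place by later code.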

Clinical AI agents must maintain immutable audit trails (often built on append-only databases or blockchain-style ledgers) that satisfy the audit and logging requirements of HIPAA, GDPR, ISO 27001, and SOC 2.

This not only satisfies regulators, but it also reassures clinicians that AI is working with them, not around them.
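One common way to make an audit trail tamper-evident without a full blockchain is hash chaining: each entry stores the hash of the previous one, so editing any past entry invalidates every later hash. The sketch below keeps entries in memory for brevity. It assumes a real deployment would persist them to append-only storage and periodically anchor the latest hash somewhere the writer cannot modify, since anyone able to rewrite the whole chain (or silently drop its newest entries) could otherwise go undetected.

```python
import hashlib
import json


class HashChainedAuditLog:
    """In-memory, append-only audit log; each entry commits to its predecessor."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self._entries: list[dict] = []

    @staticmethod
    def _digest(prev_hash: str, payload: str) -> str:
        return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        payload = json.dumps(event, sort_keys=True)
        entry_hash = self._digest(prev_hash, payload)
        # Store a decoded copy so later changes to the caller's dict have no effect
        self._entries.append(
            {"event": json.loads(payload), "prev_hash": prev_hash, "hash": entry_hash}
        )
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; an edited, reordered, or removed mid-chain entry fails."""
        prev_hash = self.GENESIS
        for entry in self._entries:
            payload = json.dumps(entry["event"], sort_keys=True)
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, payload):
                return False
            prev_hash = entry["hash"]
        return True


log = HashChainedAuditLog()
log.append({"user": "dr-0042", "role": "physician", "action": "summarize_chart"})
log.append({"user": "rn-0107", "role": "nurse", "action": "view_vitals"})
print(log.verify())  # True
```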

Enterprise-Grade RBAC: Enforcing Digital Boundaries

Role-Based Access Control (RBAC) is the foundation of security for every EHR-integrated AI agent. It defines who can do what, where, and when.

For a clinical AI assistant, that might look like:

- Physicians: Full access to patient data, able to validate AI recommendations.

- Nurses: Limited data access for vitals and treatment records.

- Admins: Access to scheduling, billing, and non-clinical workflows.
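A minimal way to express these roles in code is a deny-by-default map from each role to an explicit set of permissions. The permission strings below are hypothetical placeholders, not names from any particular EHR or identity platform.

```python
from enum import Enum


class Role(Enum):
    PHYSICIAN = "physician"
    NURSE = "nurse"
    ADMIN = "admin"


# Hypothetical permission names; a real deployment would derive these from policy.
ROLE_PERMISSIONS: dict[Role, frozenset[str]] = {
    Role.PHYSICIAN: frozenset({
        "read:full_chart", "read:vitals", "read:treatments",
        "validate:ai_recommendation",
    }),
    Role.NURSE: frozenset({"read:vitals", "read:treatments"}),
    Role.ADMIN: frozenset({"read:schedule", "read:billing"}),
}


def is_allowed(role: Role, permission: str) -> bool:
    """Deny by default: a permission is granted only if explicitly listed."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


print(is_allowed(Role.NURSE, "read:full_chart"))  # False
```

Because anything not listed is refused, adding a new capability requires an explicit policy change, which keeps privilege creep visible.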

| Modern RBAC implementations use identity platforms like WorkOS, Okta, or Stytch, with OpenID Connect for authentication and OAuth 2.0 for delegated authorization. |
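In practice, roles usually arrive as claims in an OpenID Connect ID token or OAuth 2.0 access token. The sketch below assumes the token's signature, issuer, audience, and expiry have already been validated by an OIDC library, and that your identity provider is configured to emit a `groups` claim; the group names and the mapping are hypothetical.

```python
# Hypothetical mapping from identity-provider group names to internal roles.
GROUP_TO_ROLE: dict[str, str] = {
    "clinical-physicians": "physician",
    "clinical-nurses": "nurse",
    "ops-admins": "admin",
}


def roles_from_claims(claims: dict) -> frozenset[str]:
    """Map the groups in an already-validated token to internal roles.

    Signature, issuer, audience, and expiry checks are assumed to have been
    done by an OIDC library before this is called. Unrecognized groups, or a
    missing or malformed "groups" claim, grant no roles.
    """
    groups = claims.get("groups")
    if not isinstance(groups, list):
        return frozenset()
    return frozenset(GROUP_TO_ROLE[g] for g in groups if g in GROUP_TO_ROLE)


claims = {"sub": "user-123", "groups": ["clinical-nurses", "marketing"]}
print(roles_from_claims(claims))  # frozenset({'nurse'})
```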

The combination of traceable logs and RBAC policies ensures that every AI action is contextually authorized and auditable.

If an unauthorized agent action occurs, it can be traced back immediately to who triggered it, under what role, and with what privileges.
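The pairing of RBAC and traceability can be sketched as a single gate that every agent action passes through: it checks the caller's role, records the decision, and only then runs the action. The policy, the action names, and the in-memory `AUDIT_TRAIL` list are illustrative assumptions.

```python
from datetime import datetime, timezone
from typing import Callable

# Hypothetical policy: role name -> actions the agent may perform for that role.
POLICY: dict[str, set[str]] = {
    "physician": {"draft_note", "alert_physician"},
    "nurse": {"alert_physician"},
}

# Stand-in for an append-only audit store.
AUDIT_TRAIL: list[dict] = []


class AccessDenied(PermissionError):
    """Raised when a role is not permitted to trigger an agent action."""


def run_agent_action(user_id: str, role: str, action: str,
                     handler: Callable[[], str]) -> str:
    """Authorize, record the decision, and run the action only if allowed."""
    allowed = action in POLICY.get(role, set())
    # Every attempt is logged, including denied ones
    AUDIT_TRAIL.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "role": role,
        "action": action,
        "decision": "allow" if allowed else "deny",
    })
    if not allowed:
        raise AccessDenied(f"role {role!r} may not perform {action!r}")
    return handler()


note = run_agent_action("dr-0042", "physician", "draft_note",
                        lambda: "Draft note created")
try:
    run_agent_action("rn-0107", "nurse", "draft_note", lambda: "not reached")
except AccessDenied as exc:
    print(exc)  # role 'nurse' may not perform 'draft_note'
```

Logging denied attempts as well as allowed ones is what makes the "who, under what role" question answerable after an incident.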

Designing a Traceable Clinical AI Architecture

A secure and transparent clinical AI agent, like other AI agents for healthcare automation, relies on several architectural layers working in harmony:

- Perception Layer: Gathers structured and unstructured clinical data from EHR systems, labs, and imaging sources.

- Reasoning Engine: A fine-tuned LLM (such as GPT-4o or a Llama 3-based medical model) augmented with medical terminologies and code sets (SNOMED CT, ICD-10, CPT).

- Action Layer: Executes predefined actions such as drafting notes or alerting physicians.

- Traceability Layer: Maintains an immutable audit trail and stores reasoning logs.

- RBAC Layer: Integrates enterprise identity providers for authentication and contextual access.

- Compliance Layer: Ensures alignment with HIPAA, FDA SaMD guidance, and IEC 62304.

Know That: This modular stack enables scalability and accountability, the two key requirements for enterprise-grade deployment.

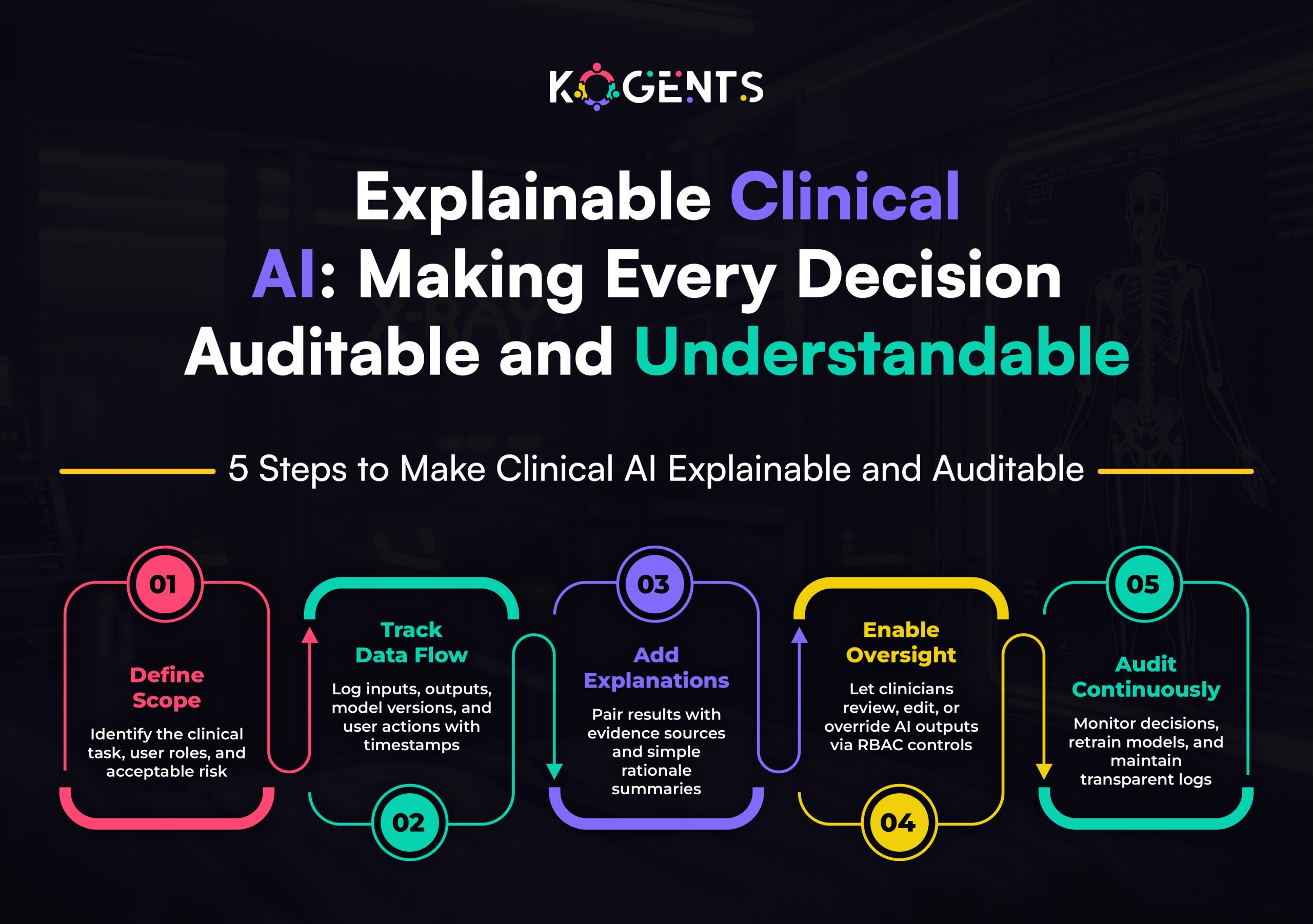
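The layers can be wired together so that each one records what it did under a shared trace ID, which lets an auditor follow a single request from data retrieval to action. In this sketch every layer is a stub: the EHR fetch returns fixed example data, a fixed threshold stands in for the reasoning model, and a single role check stands in for the RBAC layer. All function names are hypothetical, and the compliance layer is omitted.

```python
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Trace:
    """Traceability layer: one entry per layer step under a shared trace ID."""
    trace_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    steps: list[tuple[str, str]] = field(default_factory=list)

    def record(self, layer: str, detail: str) -> None:
        self.steps.append((layer, detail))


def perceive(patient_id: str, trace: Trace) -> dict:
    # Perception layer stub: stands in for an EHR/FHIR fetch with fixed data
    data = {"patient_id": patient_id, "potassium_mmol_l": 5.3}
    trace.record("perception", f"fetched labs for {patient_id}")
    return data


def reason(data: dict, trace: Trace) -> str:
    # Reasoning layer stub: a fixed threshold stands in for a model call
    finding = "elevated potassium" if data["potassium_mmol_l"] > 5.0 else "no flag"
    trace.record("reasoning", f"model=stub-v1 finding={finding}")
    return finding


def act(finding: str, role: str, trace: Trace) -> Optional[str]:
    # RBAC gate runs before the action layer does anything
    if role != "physician":
        trace.record("rbac", f"denied role={role}")
        return None
    trace.record("rbac", f"allowed role={role}")
    trace.record("action", f"drafted alert: {finding}")
    return f"Alert: {finding}"


trace = Trace()
result = act(reason(perceive("pt-001", trace), trace), "physician", trace)
print(trace.trace_id, [layer for layer, _ in trace.steps])
```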

Explainability and Human Oversight

Transparency is a clinical necessity. Clinicians must be able to see and understand how an agent reached its conclusion.

Explainability Techniques

- Evidence Linking: Attach each output to source data (lab values, radiology notes).

- Rationale Narratives: Use natural language explanations to describe decision logic.

- Visual Explainability: Employ attention heatmaps to highlight influential data features.

- Human Override Loops: Enable clinicians to correct, annotate, or veto AI actions.
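Evidence linking, rationale narratives, and human override loops can share one data structure. The sketch below, with hypothetical class and field names and illustrative example data, keeps each AI recommendation in a pending state until a clinician approves, amends, or rejects it; only approved or amended output is treated as actionable.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    AMENDED = "amended"
    REJECTED = "rejected"


@dataclass
class Recommendation:
    text: str
    rationale: str                  # rationale narrative shown to the clinician
    evidence: list[str]             # links to source data (evidence linking)
    status: Status = Status.PENDING_REVIEW
    reviewer_id: Optional[str] = None
    annotations: list[str] = field(default_factory=list)

    def review(self, clinician_id: str, decision: Status,
               note: str = "", amended_text: Optional[str] = None) -> None:
        """Human override loop: a clinician approves, amends, or vetoes once."""
        if self.status is not Status.PENDING_REVIEW:
            raise ValueError("recommendation has already been reviewed")
        if decision is Status.PENDING_REVIEW:
            raise ValueError("a review must reach a decision")
        if decision is Status.AMENDED:
            if not amended_text:
                raise ValueError("an amendment needs replacement text")
            self.text = amended_text
        self.status = decision
        self.reviewer_id = clinician_id
        if note:
            self.annotations.append(note)

    @property
    def executable(self) -> bool:
        # Only clinician-approved or clinician-amended output may be acted on
        return self.status in (Status.APPROVED, Status.AMENDED)


rec = Recommendation(
    text="Consider repeating the potassium level in 24 hours.",
    rationale="Potassium of 5.3 mmol/L is above the reference range.",
    evidence=["Observation/123"],
)
assert not rec.executable  # nothing is actionable before review
rec.review("dr-0042", Status.AMENDED, note="Repeat sooner given renal history",
           amended_text="Repeat the potassium level in 12 hours.")
```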

Insight: Explainable AI (XAI) helps keep clinical decisions human-supervised, in line with FDA guidance on AI/ML-based SaMD.

As Hippocratic AI and Heidi Health demonstrate, clinicians trust AI systems that show their reasoning, not just their answers.

Case Study 1: Oracle Health’s AI Documentation Agent

Oracle Health launched an AI clinical documentation agent to automate chart summarization and reduce clinician burnout.

Implementation Highlights:

- Integrated with Epic and Cerner EHRs using FHIR APIs.

- Each generated note was traceable, with the clinician ID, prompt, model version, and output rationale stored.

- RBAC rules governed access, ensuring clinicians could only summarize their own patients.

- Used ISO 27001-certified cloud infrastructure for audit log storage.

Outcome: Oracle Health reported a substantial reduction in charting time and 100% compliance in privacy audits. The project became a benchmark for traceable AI deployments in enterprise healthcare.

Case Study 2: Heidi Health – Explainable AI Scribe

Heidi Health, an Australian medtech company, developed an intelligent clinical scribe that transforms doctor-patient conversations into medical notes.

Key Innovations:

- Fine-tuned LLMs with domain-specific grounding on Australian healthcare standards.

- Role-specific RBAC profiles for doctors, nurses, and administrative staff.

- The traceability layer stored every transcription and reasoning path.

- Achieved compliance with Australian Digital Health Agency security benchmarks.

Impact: Clinician note-taking time dropped by more than half, and user trust ratings rose sharply, thanks to transparent reasoning explanations.

The Compliance Intersection: Where Traceability Meets RBAC

Regulations and frameworks such as HIPAA, GDPR, and the FDA's SaMD guidance all emphasize traceability and access control.

Here’s how they intersect:

- HIPAA: Demands secure PHI handling → enforced via RBAC and encrypted audit logs.

- GDPR: Mandates data minimization and transparency → achieved through access restrictions and trace logs.

- FDA SaMD: Requires algorithm transparency → supported by versioned model registries and explainable outputs.

- ISO 27001/SOC 2: Focuses on security management → enabled by continuous monitoring and access logging.

Together, these frameworks ensure that your clinical AI agent, including one that supports remote health monitoring, is not only functional but demonstrably compliant.
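GDPR's data minimization principle and HIPAA's minimum necessary standard can both be approximated by returning only the fields a role needs. The role-to-field mapping and the sample record below are hypothetical; real policies are typically more granular.

```python
# Hypothetical mapping: which record fields each role needs for its tasks.
FIELDS_BY_ROLE: dict[str, frozenset[str]] = {
    "physician": frozenset({"patient_id", "name", "vitals", "medications", "diagnoses"}),
    "nurse": frozenset({"patient_id", "name", "vitals", "medications"}),
    "admin": frozenset({"patient_id", "name", "appointments"}),
}


def minimized_view(record: dict, role: str) -> dict:
    """Return only the fields this role may see; unknown roles get nothing."""
    allowed = FIELDS_BY_ROLE.get(role, frozenset())
    return {key: value for key, value in record.items() if key in allowed}


patient = {
    "patient_id": "pt-001",
    "name": "Jane Doe",
    "vitals": {"heart_rate": 72},
    "medications": ["lisinopril"],
    "diagnoses": ["hypertension"],
    "appointments": ["2025-01-10"],
}
print(sorted(minimized_view(patient, "admin")))  # ['appointments', 'name', 'patient_id']
```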

Clinical AI Agent vs Other Healthcare Tools

| Feature | Clinical AI Agent | Medical Chatbot | Rule-Based CDS |
| --- | --- | --- | --- |
| Context Awareness | Deep (EHR-integrated) | Surface-level | Static logic |
| Traceability | Full audit trail | Minimal | Partial |
| Explainability | Built-in rationales | Limited | Deterministic |
| RBAC Integration | Enterprise-grade | Rare | Moderate |
| Adaptability | Self-learning | Scripted | Rule-based |
| Compliance Readiness | High (HIPAA, ISO, GDPR) | Low | Medium |

Conclusion: A traceable, RBAC-secured clinical AI agent represents the future of compliant healthcare automation, combining intelligence with interpretability.

Best Practices for Builders and Healthcare Entrepreneurs

- Design for compliance first, not later. Integrate HIPAA and ISO controls from the start.

- Start small: Deploy one high-value workflow, like discharge summaries or lab result triage.

- Automate your audits: Use AWS Audit Manager or Google Cloud Assured Workloads for compliance tracking.

- Use de-identified data during training to maintain privacy.

- Implement federated learning to train models without centralizing PHI.

- Red-team your AI: Simulate malicious prompts to detect vulnerabilities.

- Integrate explainability dashboards for clinicians.

- Version everything: Models, prompts, and datasets must all be logged.

- Monitor continuously: track performance drift, latency, and data leakage, including in AI remote health monitoring deployments.

- Collaborate with clinical governance boards to align with institutional ethics.
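For the "version everything" practice, one lightweight approach is to store a fingerprint with every inference: a hash over the model identifier, the exact prompt template, and a manifest of dataset versions. The function name and the manifest format are assumptions for illustration.

```python
import hashlib
import json


def version_fingerprint(model_id: str, prompt_template: str,
                        dataset_manifest: dict[str, str]) -> str:
    """Hash the exact model, prompt, and dataset versions behind an inference.

    dataset_manifest maps dataset names to content hashes or version tags.
    """
    canonical = json.dumps(
        {
            "model_id": model_id,
            "prompt_sha256": hashlib.sha256(prompt_template.encode("utf-8")).hexdigest(),
            "datasets": dataset_manifest,
        },
        sort_keys=True,  # key order in the manifest does not change the result
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


fp_a = version_fingerprint("summarizer-2024-06-01", "Summarize: {note}", {"train": "v3"})
fp_b = version_fingerprint("summarizer-2024-06-01", "Summarize briefly: {note}", {"train": "v3"})
print(fp_a != fp_b)  # True
```

If any of the three inputs changes, the fingerprint changes, so outputs produced under different configurations are easy to tell apart in the audit trail.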

Design the Future of Responsible Clinical AI With Us!

Designing a clinical AI agent with traceable actions and enterprise-grade RBAC is not merely a technical endeavor; it’s a moral and strategic one.

AI in telemedicine needs trust at its core, and trust is built on transparency.

The future of healthcare belongs to intelligent, explainable, and compliant AI systems that can reason like clinicians, act with precision, and justify every decision.

As agents become more autonomous, from medical scribes to diagnostic collaborators, traceability and RBAC will define their legitimacy.

By combining EHR integration, data provenance, explainability, and secure RBAC, organizations can deploy agents that are as safe as they are smart.

Healthcare entrepreneurs, solopreneurs, and innovators now have the tools to create systems that respect both patients’ privacy and clinicians’ expertise, bridging innovation with integrity.

Have a look at how Kogents empowers clinicians and innovators to deploy trusted, compliant AI agents. To get in touch, call us at +1 (267) 248-9454 or drop an email at info@kogents.ai.

FAQs

What is a clinical AI agent?

A reasoning and workflow automation system designed for clinical environments, integrating with EHRs to assist in documentation, decision support, and patient management.

How do traceable AI actions enhance compliance?

They provide auditability for every model inference, which helps satisfy HIPAA and FDA documentation requirements.

Why is RBAC critical in healthcare AI?

It restricts access to sensitive patient data and prevents unauthorized use, supporting least-privilege principles.

Are clinical AI agents explainable?

Yes, modern systems include reasoning summaries, confidence indicators, and evidence links.

Can clinical AI agents integrate with EHRs like Epic or Cerner?

Yes, through FHIR-based APIs or direct SDK integrations.

How can a startup build a compliant clinical agent?

Start with open frameworks like LangChain or LlamaIndex, add audit logging, and ensure encryption.

Do any FDA-approved AI agents exist?

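Yes, in a narrow sense: several AI-based diagnostic tools (e.g., IDx-DR, Viz.ai) have received FDA marketing authorization as SaMD, although these are task-specific tools rather than general-purpose agents.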

What is the biggest challenge in deploying clinical agents?

Balancing innovation with compliance—especially managing data governance and explainability.

How can clinicians trust AI decisions?

Through explainable reasoning, version control, and real-time visibility into decision paths.

What’s the next evolution for clinical AI agents?

Agentic AI ecosystems—multi-agent systems collaborating across care teams, governed by transparent traceability and federated RBAC frameworks.


Kogents AI builds intelligent agents for healthcare, education, and enterprises, delivering secure, scalable solutions that streamline workflows and boost efficiency.