How an automated grading system handles scalability

Summary:

Education and business are experiencing a digital revolution, and automated grading systems are at the forefront of this transformation.

As schools, universities, and online platforms scale globally, the demand for accurate, fair, and efficient grading has skyrocketed. Traditional manual grading simply can’t keep up with the massive surge in online exams, essays, and digital learning assessments.

This is where AI-driven automated grading software shines.

It ensures not only speed and accuracy but also scalability: the ability to handle thousands, even millions, of assessments across diverse learning environments without sacrificing quality. For entrepreneurs, solopreneurs, and educators, understanding this technology is essential to staying ahead in the ever-expanding EdTech landscape.

Key Takeaways

- Understanding how automated grading systems make large-scale assessments faster and more efficient.

- Learning why instant, consistent feedback is key to improving student motivation and performance.

- Exploring how these systems save educators time and reduce grading stress.

- Discovering how AI-powered tools handle peak loads and maintain fairness.

- Seeing how scalable grading supports growth for courses, bootcamps, and EdTech platforms.

What Are AI Agents in Automated Grading Systems?

At the heart of today’s automated grading system are AI agents, intelligent software modules designed to mimic human evaluators.

They rely on machine learning (ML), natural language processing (NLP), and deep learning models to analyze student responses, essays, and exam answers.

Think of AI agents as virtual teaching assistants that never tire. They can:

- Score multiple-choice tests with computerized assessment systems.

- Evaluate essays using automatic essay scoring systems.

- Provide instant feedback via intelligent assessment platforms.

Pro Tip for Entrepreneurs: If you’re building a learning product, consider integrating AI agents into your Learning Management System (LMS) for seamless scaling.

Why Entrepreneurs, Solopreneurs & Educators Need an Automated Grading System

At its core, an automated grading system uses AI to evaluate assessments (MCQs, short answers, essays, code, and more) and return scores + feedback with minimal human touch.

For fast-growing programs, bootcamps, MOOCs, corporate academies, and district-wide rollouts, manual grading quickly becomes the bottleneck.

Soft reminder: AI grading removes that bottleneck, so you can scale without multiplying graders.

The business case

- Throughput at peak load: Run thousands of submissions per minute with cloud auto-scaling instead of paying weekend overtime.

- Consistency: The same rubric produces the same outputs every time, which reduces the variability that creeps in with large grader teams. Studies have shown that, in certain contexts, automated essay scoring can match or exceed human-to-human agreement, especially for well-defined writing tasks.

- Time-to-feedback: Faster feedback improves learner momentum and retention—critical for subscription or cohort models.

- Data at your fingertips: Item-level analytics, rubric dimensions, and error categories surface what to fix in your content.

Educator’s angle

Teachers reclaim hours to focus on higher-order feedback and mentoring.

Many platforms embed grading directly into the LMS workflow to centralize progress, regrading, and reporting.

Soft reminder: if your enrollments spike (e.g., a course goes viral), you can’t staff up graders overnight. Automation is capacity insurance.

How AI Agents Work in an Automated Grading System to Handle Scalability

- Ingestion via LMS/CBT: Submissions enter through your LMS or testing platform (web, mobile, proctoring app).

- Queue + Orchestration: Jobs land in a task queue (e.g., XQueue in Open edX) to decouple submission from scoring, smooth spikes, and support retries.

- Model services:

- Objective items (MCQ/TF): deterministic keys and item banks.

- Short answers: semantic matching + pattern rules + answer grouping. (Turnitin’s Gradescope groups similar answers for bulk actions.)

- Essays: NLP models (feature-based + neural) extract grammar, organization, development, and coherence features, then predict a score aligned to a rubric.

Example: ETS’s e-rater is a canonical essay-scoring engine.

- Programming tasks: sandboxed autograders (often Docker-based) run test suites and return structured feedback.

Example: Coursera’s autograder toolkit illustrates the approach.

- Feedback generation: Trait-level comments and rubric-aligned notes get assembled into a human-readable response.

- Storage + Reporting: Scores, rubrics, and analytics write back to the LMS gradebook with dashboards for instructors/ops.

- Auto-scaling: Cloud instances scale horizontally as queues grow, enabling burst capacity during deadlines.
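To make the queue step in the pipeline above concrete, here is a minimal sketch using only Python's standard library. It is not how XQueue or any specific platform is implemented. The names `grade_mcq`, `worker`, `grade_all`, and the `MAX_ATTEMPTS` retry budget are hypothetical, and an in-process queue with threads stands in for a real message broker and scoring service.

```python
import queue
import threading

MAX_ATTEMPTS = 3  # hypothetical retry budget per submission


def grade_mcq(submission, answer_key):
    """Deterministic scoring: fraction of items that match the answer key."""
    answers = submission["answers"]  # raises KeyError on malformed input
    correct = sum(1 for item, key in answer_key.items() if answers.get(item) == key)
    return correct / len(answer_key)


def worker(jobs, results, answer_key, lock):
    """Pull jobs until a None sentinel arrives; retry failures a few times."""
    while True:
        job = jobs.get()
        if job is None:
            jobs.task_done()
            return
        submission, attempt = job
        try:
            score = grade_mcq(submission, answer_key)
            with lock:
                results[submission["id"]] = score
        except Exception:
            if attempt < MAX_ATTEMPTS:
                jobs.put((submission, attempt + 1))  # re-enqueue for another try
            else:
                with lock:
                    results[submission["id"]] = None  # flag for human review
        finally:
            jobs.task_done()


def grade_all(submissions, answer_key, num_workers=4):
    """Submission is decoupled from scoring: producers only enqueue jobs."""
    jobs = queue.Queue()
    results = {}
    lock = threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(jobs, results, answer_key, lock))
        for _ in range(num_workers)
    ]
    for t in threads:
        t.start()
    for s in submissions:
        jobs.put((s, 1))
    jobs.join()  # wait for every job, including retries
    for _ in threads:
        jobs.put(None)  # one stop sentinel per worker
    for t in threads:
        t.join()
    return results
```

In this sketch a submission that keeps failing ends up with a score of `None`, which a real system would route to a human reviewer rather than silently drop.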

Why does this scale?

- Stateless model endpoints + asynchronous queues absorb spikes.

- Batch + streaming modes let you prioritize “instant results” or cost-efficient overnight runs.

- Cache + shard by course or assessment to keep latency low and costs bounded.
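The scaling logic can be reduced to simple arithmetic. The sketch below is one possible sizing rule, not a formula from any cloud provider: the function `workers_needed` and all of its parameters are assumptions chosen for illustration. It sizes the worker pool so that new arrivals are absorbed and the existing backlog drains within a target window.

```python
import math


def workers_needed(queue_depth, arrivals_per_min, secs_per_job,
                   drain_target_min, max_workers):
    """Workers required to keep up with arrivals and drain the backlog in time."""
    per_worker_per_min = 60.0 / secs_per_job  # jobs one worker finishes per minute
    required_rate = arrivals_per_min + queue_depth / drain_target_min
    wanted = math.ceil(required_rate / per_worker_per_min)
    return max(1, min(max_workers, wanted))  # keep at least 1, cap at the budget


# Deadline rush: 6,000 queued, 1,000 arriving per minute, 3 s per job,
# and a goal of clearing the backlog within 5 minutes.
print(workers_needed(6000, 1000, 3, 5, max_workers=200))
```

The `max_workers` cap is what keeps costs bounded: when the cap is hit, latency rises instead of spend.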

Reality check: For free-response writing, credible vendors combine AI + human review at the edges (spot checks, appeals) to maintain trust and address nuanced prompts.

Core Features of Modern AI Agents in Grading Systems

- Rubric alignment & explainability. The model doesn’t just output a number; it maps to rubric criteria (organization, mechanics, development).

ETS’s e-rater explicitly extracts features tied to writing constructs, supporting transparency in feedback.

- Answer grouping & bulk actions. Cluster similar short answers to apply one decision to hundreds at once (Gradescope’s well-known timesaver).

- Human-in-the-loop (HITL). Smart escalation when confidence is low, plus regrading and appeals.

- Bias and drift monitoring. Track subgroup error rates and model drift; apply mitigations and periodic recalibration.

Example: Brookings outlines practical bias-mitigation frames you can adapt.

- Security & integrity hooks. Proctoring data, audit trails, and anomaly detection (e.g., sudden answer-time spikes).

- Content-aware feedback. Trait-level comments, error highlights, and suggestions that teach (not just score).

- DevOps for ML. Canary models, versioned rubrics, offline validation suites, and rollback plans.

- LMS integration. Gradebook sync, regrade APIs, and role-based access to keep ops lightweight.

Example: Open edX illustrates external grader patterns.
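The answer-grouping feature listed above can be illustrated with a deliberately simple sketch. It groups short answers that become identical after normalization (lowercasing, stripping punctuation), which is a simplification: commercial tools such as Gradescope use more sophisticated grouping, and nothing here reflects their implementation. The function names `normalize`, `group_answers`, and `apply_bulk_decision` are hypothetical.

```python
import re
from collections import defaultdict


def normalize(answer):
    """Lowercase, drop punctuation, and collapse whitespace."""
    text = re.sub(r"[^a-z0-9\s]", "", answer.lower())
    return " ".join(text.split())


def group_answers(submissions):
    """Map each normalized answer to the list of students who gave it."""
    groups = defaultdict(list)
    for student_id, answer in submissions.items():
        groups[normalize(answer)].append(student_id)
    return dict(groups)


def apply_bulk_decision(groups, decisions):
    """Apply one grading decision per group to every student in that group."""
    scores = {}
    for key, students in groups.items():
        if key in decisions:  # groups without a decision stay ungraded
            for student_id in students:
                scores[student_id] = decisions[key]
    return scores


submissions = {
    "a": "Mitochondria.",
    "b": "  mitochondria",
    "c": "The nucleus",
    "d": "MITOCHONDRIA!",
}
groups = group_answers(submissions)
scores = apply_bulk_decision(groups, {"mitochondria": 1.0})
```

Here the instructor makes one decision for the "mitochondria" group and three students are graded at once; the "the nucleus" group stays ungraded until someone reviews it.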

Use Cases for Entrepreneurs, Solopreneurs & Educators

Entrepreneurs (EdTech founders).

- SaaS assessment platforms: White-label your AI-powered grading for schools and training orgs.

- Marketplace instructors: Let creators import rubrics and auto-grade at scale; monetize via per-assessment pricing.

- Certification engines: Mix secure proctoring with AI scoring to run rolling, global exams.

Solopreneurs (cohort/course creators).

- Bootcamps & nano-degrees: Autograde code + quizzes; use answer grouping on short answers to reduce your weekend grading.

- Content accelerators: Get instant analytics on what learners miss, then re-record only the weak spots.

Educators (K-12/Higher-Ed)

- Large sections/MOOCs: Scale to thousands with LMS-native autograding and AI agents for education or external graders (Open edX, Coursera).

- Writing-intensive courses: Use AI to triage grammar/organization issues, then focus human time on argument quality. (e-rater/IEA shows how NLP supports this.)

Corporate L&D.

- Skills verification: Code, case analyses, and scenario responses auto-scored with structured rubrics and immediate coaching tips.

- Compliance at scale: Rapid, consistent scoring across regions/time zones.

Benefits of Automated Grading Systems for Scalability

- Speed + consistency: Feedback within minutes, with stable rubric adherence—even at 10× volume. Research shows AES can reliably approximate human raters in many settings, making turnaround times practical at scale.

- Operational elasticity: Cloud auto-scaling absorbs deadline rushes without pre-booking graders.

- Learning analytics: Item-level difficulty, distractor analysis, and rubric-dimension trends make content decisions data-driven.

- Teacher time reclaimed: Shift human effort to coaching, projects, and interventions.

- Global reach: Multilingual assessment pipelines (speech + writing) already exist in vendors like Pearson (Versant, IEA).
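As an illustration of the learning-analytics benefit above, the sketch below computes two classic item statistics for multiple-choice questions: difficulty (the proportion of learners who answered correctly) and distractor counts (how often each wrong option was chosen). The function name `item_analysis` and the input shape are assumptions for this example, not a vendor API.

```python
from collections import Counter


def item_analysis(responses, answer_key):
    """responses: list of dicts mapping item id -> chosen option."""
    report = {}
    for item, correct in answer_key.items():
        choices = Counter(r[item] for r in responses if item in r)
        total = sum(choices.values())
        # Difficulty here is the proportion correct; None if nobody answered.
        difficulty = choices[correct] / total if total else None
        distractors = {opt: n for opt, n in choices.items() if opt != correct}
        report[item] = {"difficulty": difficulty, "distractors": distractors}
    return report


responses = [{"q1": "A"}, {"q1": "B"}, {"q1": "A"}, {"q1": "C"}]
report = item_analysis(responses, {"q1": "A"})
```

An item with very low difficulty and one dominant distractor often points to a confusing explanation in the course content rather than a weak cohort.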

Automated Grading System vs Other Tools (Comparison Table)

| Feature | Automated Grading System | Traditional Grading | Outsourced Grading |
| --- | --- | --- | --- |
| Speed | Instant | Slow | Moderate |
| Scalability | High (cloud-enabled) | Limited | Medium |
| Cost | Lower long-term | High | High |
| Accuracy | Consistent, bias-checked | Human error | Variable |
| Feedback | Rich + instant | Delayed | Delayed |

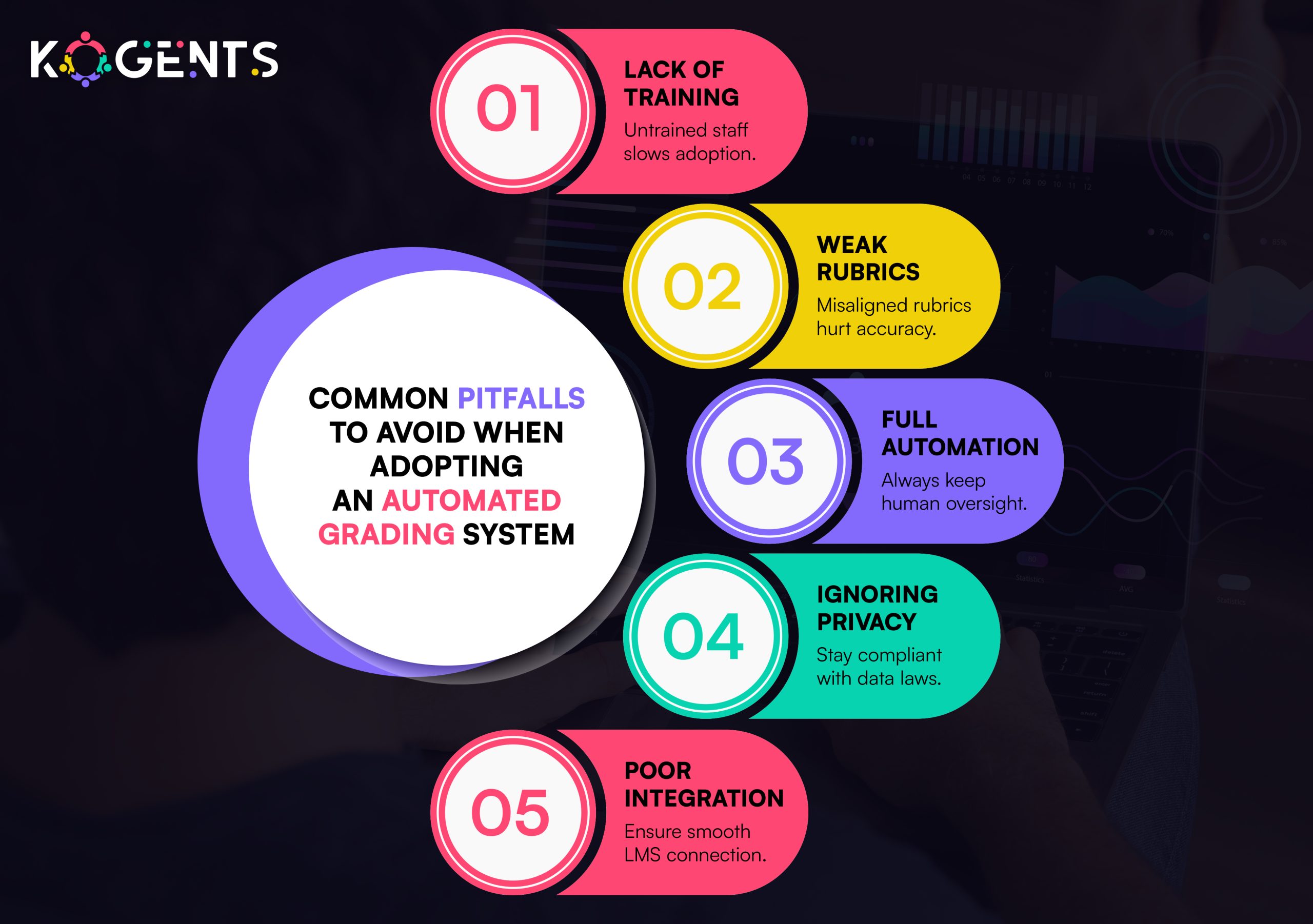

Challenges & Limitations of Automated Grading Systems

Validity of complex writing

- AI excels at structure/grammar features but can miss argument quality or originality.

- Critics like Les Perelman have shown ways to “game” some AES engines with length and obscure vocabulary, underscoring the need for human oversight in high-stakes contexts.

Bias and fairness

- Models trained on historical data may reflect subgroup biases.

- Adopt bias detection/mitigation practices and publish impact assessments.

Transparency & appeals

- Learners (and accreditors) need explanations and a clear appeal path.

- Prefer vendors that expose trait-level rationales and support regrading workflows.

Data privacy & governance

Follow global guidance (e.g., UNESCO’s 2023 recommendations) for human-centered AI, data minimization, and teacher/student agency.

Over-automation risk

Avoid “set-and-forget.” Use confidence thresholds to blend AI speed with human judgment where it matters most.
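A confidence threshold can be as simple as the routing sketch below. It assumes the scoring model reports a confidence value between 0 and 1 alongside each score, which not every model does. The `ScoredResponse` type, the `route` function, and the 0.8 default are hypothetical and should be tuned against your own audit data.

```python
from dataclasses import dataclass


@dataclass
class ScoredResponse:
    submission_id: str
    score: float
    confidence: float  # assumed to be in [0, 1], as reported by the model


def route(responses, threshold=0.8):
    """Split responses into auto-released scores and a human review queue."""
    auto_release, human_review = [], []
    for r in responses:
        target = auto_release if r.confidence >= threshold else human_review
        target.append(r.submission_id)
    return auto_release, human_review


batch = [
    ScoredResponse("s1", 4.0, 0.95),
    ScoredResponse("s2", 2.5, 0.55),
    ScoredResponse("s3", 3.0, 0.80),
]
auto_release, human_review = route(batch)
```

Raising the threshold sends more work to humans and lowers risk; lowering it does the opposite, so the setting is a policy decision as much as a technical one.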

Implementation guardrails (use in your policy).

- Publish your rubrics, model versions, and regrade SLAs.

- Log all scoring events; sample N% for human audit.

- Report disparity metrics (e.g., score error by subgroup) each term.
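The audit and disparity guardrails above can be sketched in a few lines. This is a minimal illustration, assuming you log scoring events with an ID and can pair AI scores with human scores for the audited sample. The function names, the fixed random seed, and the use of mean absolute error as the disparity metric are all assumptions made for this example.

```python
import random
from collections import defaultdict


def sample_for_audit(event_ids, percent, seed=0):
    """Pick roughly percent% of logged scoring events for human audit."""
    if not event_ids:
        return []
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    k = min(len(event_ids), max(1, round(len(event_ids) * percent / 100)))
    return sorted(rng.sample(event_ids, k))


def error_by_subgroup(audited):
    """audited: iterable of (subgroup, ai_score, human_score) tuples.

    Returns the mean absolute AI-vs-human score gap for each subgroup.
    """
    totals = defaultdict(lambda: [0.0, 0])
    for group, ai_score, human_score in audited:
        totals[group][0] += abs(ai_score - human_score)
        totals[group][1] += 1
    return {group: gap / count for group, (gap, count) in totals.items()}


audited = [("A", 4, 4), ("A", 3, 4), ("B", 2, 4)]
disparity = error_by_subgroup(audited)
```

A large gap between subgroups in this report is a signal to investigate, not proof of bias on its own; small audit samples can produce noisy numbers.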

Future of Automated Grading Systems for Scalable Education

Expect AI-first pipelines with human review on edge cases. Neural models will keep improving, but governance will tighten.

What’s coming next?

- Richer explainability: Model rationales tied directly to rubric evidence.

- Generative feedback: Drafting actionable, formative advice that teachers can approve or amend.

- Policy alignment: Systems embody ethical, human-centric standards like those advocated by UNESCO: privacy, equity, transparency.

- Plug-and-play grading stacks: Standardized APIs between LMS, proctoring, identity, and AI scoring, reducing vendor lock-in.

Founder tip: choose vendors with clear roadmaps for explainability and compliance—those will age well under emerging AI policies.

Case Study Spotlight (Mini, Practical, and Verifiable)

1) ETS e-rater (high-volume writing assessment).

What it does: Extracts features (grammar, mechanics, organization, lexical complexity) and predicts rubric-aligned writing scores; used alongside human raters for exams like TOEFL/GRE.

Scalability angle: Stateless scoring + strong agreement with human raters for appropriate tasks; supports fast turnaround for global test windows.

2) Turnitin Gradescope

AI-assisted answer grouping clusters similar responses so instructors grade a group once, then apply across hundreds of papers.

Scalability angle: Massive time savings in large STEM and CS courses; supports paper-based or digital workflows; integrates into institutional LMS.

3) Open edX & Coursera

- Open edX supports external autograders via XQueue, enabling scalable code testing and automated feedback.

- Coursera uses auto-graded quizzes and has introduced AI-assisted peer review to speed and scale qualitative feedback.

- Scalability angle: Queue-based async processing and containerized graders (e.g., Docker) allow courses to expand to tens of thousands without reviewer backlogs.

4) Pearson

Intelligent Essay Assessor (IEA) scores essays/short answers; Versant auto-scores spoken language.

Scalability angle: Multi-skill assessment (writing + speaking) at global test volumes, reducing cycle time to results.

Action Panels

Pro Tips (fast wins)

- Start with hybrid: auto-grade everything, human-review 10–20% of low-confidence cases.

- Require rubric-aligned explanations in feedback to build trust.

- Set SLOs: median turnaround under 5 minutes; 99th-percentile under 30 minutes during peak.
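To check the turnaround SLOs suggested above, a small script over your logged grading times is enough. The sketch below uses Python's standard `statistics` module; the function name `check_slo` and the input format (a list of turnaround times in minutes, at least two values) are assumptions for illustration.

```python
import statistics


def check_slo(turnaround_minutes, median_limit=5.0, p99_limit=30.0):
    """Compare median and 99th-percentile turnaround against SLO limits."""
    median = statistics.median(turnaround_minutes)
    # quantiles(n=100) returns 99 cut points; index 98 is the 99th percentile.
    p99 = statistics.quantiles(turnaround_minutes, n=100, method="inclusive")[98]
    return {
        "median": median,
        "p99": p99,
        "ok": median <= median_limit and p99 <= p99_limit,
    }


# A peak window where most jobs finish fast but 10% take an hour.
peak = check_slo([2.0] * 90 + [60.0] * 10)
```

In this example the median looks healthy while the 99th percentile breaches the limit, which is exactly the kind of tail problem a median-only target would hide.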

ROI Box

- Cost drivers: grader hours, regrading rounds, learner support load.

- Savings levers: answer grouping, rubric libraries, instant feedback (fewer tickets), reduced churn from slow grades.

- Rule of thumb: If you cross 500+ submissions/week, automation typically pays for itself (via time saved + retention uplift).

Before You Leave!

If you’re scaling fast or aiming to, grading shouldn’t be the bottleneck holding you back.

A thoughtfully designed automated grading system delivers speed, consistency, and deep analytics, while a human-in-the-loop approach ensures trust and fairness in high-stakes evaluations.

The result? More time to teach, coach, innovate, and grow your business instead of drowning in manual work.

Here, we empower entrepreneurs, solopreneurs, educators, teachers, learners, and students with tools that make grading smarter, scalable, and more impactful. Whether you’re running a micro-course or an entire EdTech platform, we help you focus on what matters most: creating transformative learning experiences.

Don’t let grading limit your growth. Partner with Kogents AI today: email info@kogents.ai or call (267) 248-9454, and redefine what’s possible for your classroom, your students, and your business.

FAQs

How do automated grading systems work?

They use AI, ML, and NLP to analyze and score student responses digitally.

What are the benefits of using AI for grading exams?

Faster turnaround, reduced costs, and consistent accuracy.

How accurate are automated grading systems compared to humans?

Agreement with human raters can be high for well-defined tasks (figures of up to 90% are cited), though human oversight is recommended for essays.

What are the limitations of automated grading systems?

Bias, data privacy risks, and difficulty with creative writing.

Can small businesses use automated grading software?

Yes, solopreneurs and startups can integrate it into online courses.

What is the difference between automated grading and traditional grading?

Automated grading is faster, scalable, and data-driven; traditional grading is slower and labor-intensive.

What is the cost of implementing an automated grading system?

It varies; SaaS models start from a few hundred dollars monthly.

What companies provide AI-powered grading software?

Pearson, ETS, Turnitin, Google Cloud AI, Microsoft Azure Cognitive Services.

Is AI grading ethical?

Yes, but institutions must address bias, transparency, and accountability.

How will automated grading systems evolve in the future?

Expect hybrid human-AI models, adaptive testing, and blockchain for secure exams.

Kogents AI builds intelligent agents for healthcare, education, and enterprises, delivering secure, scalable solutions that streamline workflows and boost efficiency.