AI Automation Testing Implementation Methodology for Mission-Critical AI Systems

Summary:

We live in an age when artificial-intelligence-driven systems can determine human lives, business continuity, and national security; the stakes for quality assurance have never been higher.

Picture a self‐driving car, a diagnostic AI for healthcare, or an autonomous defence system; any failure, bug, or unintended behaviour could provoke catastrophic outcomes.

That’s why a standard test automation approach simply won’t cut it. What you need is AI automation testing: an advanced, intelligent, adaptive approach to software quality assurance that doesn’t just check boxes but learns and evolves in tandem with the system under test.

In this blog, you will discover a full-fledged methodology for implementing AI automation testing in mission-critical AI systems. Let’s dive in!

Key Takeaways

- AI automation testing elevates QA from scripted, brittle automation to intelligent, adaptive systems that learn and self-heal.

- Mission-critical AI systems demand specialised test strategies: synthetic data, defect-prediction models, anomaly detection, and continuous validation.

- Integration with CI/CD, DevOps, QA, and tool ecosystems is essential; test automation cannot be an afterthought.

- Measuring value is key: implement the right metrics for test-coverage optimisation, ROI, defect-escape rate, self-healing rate, and reliability.

- Governance, scaling, and maintenance matter: even intelligent frameworks can degrade without oversight, versioning, and continuous improvement built in.

Why does AI automation testing matter for mission-critical AI systems?

AI automation testing and related approaches, such as AI for automation, have advanced rapidly in recent years.

Traditional automation frameworks struggle with complexity, fragility, maintenance overhead, and scale.

According to a systematic literature review, AI techniques have been applied to test-case generation, defect prediction, test-case prioritisation, and more, improving coverage, reducing manual effort, and enhancing fault detection.

In mission-critical AI systems, you face additional risks: model drift, adversarial inputs, ethical/regulatory constraints, real-time performance requirements, and high-availability demands.

Key reasons why AI automation testing is vital:

- Scale & Complexity: AI systems may involve thousands of data permutations, complex model decision paths, edge cases, and continuous learning loops, making manual or traditional automation insufficient.

- Adaptivity: Models change, data evolves, and new behaviour emerges; test automation must adapt dynamically.

- Safety & Reliability: Mission‐critical systems can’t fail silently; you need predictive defect analytics, anomaly detection, and continuous test-coverage optimisation.

- Continuous Delivery & DevOps: Modern AI systems deploy often; test automation must be integrated into the CI/CD pipeline to keep pace.

- Governance and Auditability: Standards such as ISO/IEC 25010 (software product quality, developed by ISO/IEC JTC 1/SC 7) and ISO/IEC 27001 (information security management) matter here, so test frameworks must support traceability, versioning, and ethical/AI compliance.

Defining the scope: What do “mission-critical AI systems” entail?

These are systems where a failure, a major defect, or unmitigated drift can lead to significant harm: threats to human safety, financial loss, regulatory non-compliance, reputational catastrophe, or national security exposure.

Examples include:

- Autonomous vehicles or aircraft (self-driving cars, drones)

- Medical diagnostic AI or robotic surgery assistants

- Financial trading systems that use ML for real-time decisions

- Military/defence autonomous systems

- Critical infrastructure control systems (power grid, utilities) with embedded AI

Implementation methodology – step-by-step

Each phase below shows where AI-based automated testing, AI-enabled test frameworks, predictive test automation, self-healing automation, and adaptive testing fit into the overall approach.

A: Strategy and Governance

- Establish a test automation strategy: clarify why you need AI automation testing and what it must achieve.

- Identify stakeholders: QA leads, ML engineers, DevOps, compliance/regulatory.

- Risk assessment: Map mission-critical components, model failure modes, data-drift risk, and regulatory impact (e.g., for healthcare AI).

- Define KPIs: test-coverage optimisation, reduction in failures through self-healing, time to detect anomalies, and ROI of test automation.

- Governance & compliance: Align with relevant standards and certifications (e.g., ISO/IEC 25010, the ISTQB Certified Tester AI Testing certification).

- Build a cross-functional team: QA automation, ML assurance, DevOps, security.
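To make the risk-assessment step more concrete, here is a minimal Python sketch of a risk register that ranks components by a likelihood × severity score. The `RiskItem` class, the 1 to 5 scales, the threshold of 12, and the example components are illustrative assumptions, not part of any standard; a regulated programme would typically use its own domain's hazard-analysis scheme.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskItem:
    """One row of a hypothetical risk register (scales are 1 to 5)."""

    component: str
    failure_mode: str
    likelihood: int  # 1 = rare ... 5 = almost certain
    severity: int    # 1 = negligible ... 5 = catastrophic

    @property
    def score(self) -> int:
        # Likelihood x severity gives a score from 1 to 25.
        return self.likelihood * self.severity


def prioritise(risks: list[RiskItem], threshold: int = 12) -> list[RiskItem]:
    """Keep items scoring at or above `threshold`, highest risk first."""
    high = [r for r in risks if r.score >= threshold]
    return sorted(high, key=lambda r: r.score, reverse=True)


register = [
    RiskItem("diagnosis-model", "misclassification after data drift", 3, 5),
    RiskItem("inference-api", "latency spike under peak load", 4, 3),
    RiskItem("report-ui", "layout regression", 3, 1),
]

for item in prioritise(register):
    print(f"{item.component}: {item.failure_mode} (score {item.score})")
```

The high-scoring items are natural candidates for the deepest automated coverage and the strictest quality gates.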

B: Architecture & Tool Selection

- Assess your current stack: does it rely on traditional scripted automation (e.g., Selenium, Appium, Cypress), and how will AI-enabled frameworks sit alongside it?

- Select AI test automation tools, e.g., mabl, Tricentis, SmartBear, Applitools, or Testim; one recent systematic review identified around 55 such tools.

- The architecture must accommodate continuous testing, CI/CD pipeline integration, test-data management, and logging/telemetry for anomaly detection.

- Design for extensibility: modules for synthetic test data generation, defect-prediction analytics, and self-healing test scripts.

- Choose metrics and dashboards early (test‐suite reliability, defect escape, test-cycle time, self-healing rate).
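One way to design for extensibility is a small plugin registry, so that synthetic-data, defect-prediction, or self-healing modules can be added without touching the pipeline core. The sketch below assumes a simple convention (each module is a function that takes and returns a dict); the `plugin` decorator, module names, and context keys are hypothetical, and the module bodies are placeholders.

```python
from typing import Callable

# Each module receives a shared context dict and returns its findings.
# Module names and context keys are illustrative assumptions.
ModuleFn = Callable[[dict], dict]
REGISTRY: dict[str, ModuleFn] = {}


def plugin(name: str) -> Callable[[ModuleFn], ModuleFn]:
    """Decorator that registers a pluggable QA module under `name`."""

    def wrap(fn: ModuleFn) -> ModuleFn:
        if name in REGISTRY:
            raise ValueError(f"module {name!r} is already registered")
        REGISTRY[name] = fn
        return fn

    return wrap


@plugin("synthetic_data")
def synthetic_data(ctx: dict) -> dict:
    # Placeholder: a real module would call a data generator or simulator.
    return {"rows_generated": ctx.get("rows", 0)}


@plugin("defect_prediction")
def defect_prediction(ctx: dict) -> dict:
    # Placeholder: rank modules by a precomputed risk score.
    risk = ctx.get("module_risk", {})
    return {"ranked": sorted(risk, key=risk.get, reverse=True)}


def run_pipeline(order: list[str], ctx: dict) -> dict:
    """Run the named modules in order and collect results by name."""
    return {name: REGISTRY[name](ctx) for name in order}


results = run_pipeline(
    ["synthetic_data", "defect_prediction"],
    {"rows": 1000, "module_risk": {"pricing": 0.7, "ui": 0.1, "auth": 0.4}},
)
print(results)
```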

C: Test-Data Preparation and Synthetic Test Data Generation

- For AI models, the input space is huge: testing needs high-quality, varied, representative data plus edge-case and adversarial scenarios.

- Generate synthetic test data via generative AI, data augmentation, or simulation.

- Ensure data privacy and compliance (especially in healthcare and finance).

- Incorporate anomaly detection for data drift and out-of-distribution inputs.

- Prepare labelled data for defect-prediction modelling and for regression automation.

- Ensure test-coverage optimisation across data dimensions: classes, edge cases, adversarial attacks, and fairness/bias dimensions.
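As a minimal illustration of rule-based synthetic test data, the sketch below combines boundary values, seeded random sampling, and Gaussian noise augmentation for a hypothetical two-feature vital-signs schema. It does not use generative AI or simulation; `SCHEMA`, the feature ranges, and the `expect_valid` label (whether the system under test should accept the input) are assumptions for illustration.

```python
import random

# Hypothetical input schema for a vital-signs model: feature -> (min, max).
SCHEMA = {"heart_rate": (30.0, 220.0), "temperature_c": (34.0, 42.0)}
EPS = 1e-3  # step used to land just outside a valid range


def boundary_cases(schema):
    """Vary one feature at a time to its min, max, and just-outside values."""
    mid = {f: (lo + hi) / 2 for f, (lo, hi) in schema.items()}
    cases = []
    for feature, (lo, hi) in schema.items():
        for value in (lo, hi, lo - EPS, hi + EPS):
            record = dict(mid)
            record[feature] = value
            # expect_valid: should the system under test accept this input?
            cases.append({"record": record, "expect_valid": lo <= value <= hi})
    return cases


def random_cases(schema, n, seed=0):
    """Uniformly sample n in-range records (seeded for reproducibility)."""
    rng = random.Random(seed)
    return [
        {"record": {f: rng.uniform(lo, hi) for f, (lo, hi) in schema.items()},
         "expect_valid": True}
        for _ in range(n)
    ]


def augment_with_noise(cases, schema, rel_scale=0.01, seed=0):
    """Jitter each feature with Gaussian noise scaled to its range width.

    Labels are recomputed because noise can push a value out of range.
    """
    rng = random.Random(seed)
    out = []
    for case in cases:
        record = {
            f: v + rng.gauss(0.0, rel_scale * (schema[f][1] - schema[f][0]))
            for f, v in case["record"].items()
        }
        valid = all(schema[f][0] <= v <= schema[f][1] for f, v in record.items())
        out.append({"record": record, "expect_valid": valid})
    return out


suite = boundary_cases(SCHEMA) + random_cases(SCHEMA, n=100)
suite += augment_with_noise(suite, SCHEMA)
print(len(suite), "cases,", sum(not c["expect_valid"] for c in suite), "expected rejections")
```

Seeding every generator keeps the suite reproducible, which matters when a failing synthetic case has to be replayed for an audit.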

D: Test-Case Generation, Prioritisation & Coverage Optimisation

- Leverage ML techniques for test-case generation and test-case prioritisation

- Use model-based testing, metamorphic testing, and fuzzing for AI model inputs.

- Define coverage metrics: code coverage, model-decision path coverage, data path coverage, and adversarial input coverage.

- Automate regression sets and prioritise flows via adaptive testing; when system changes occur, the test suite adjusts.

- Incorporate defect prediction models to anticipate where bugs are likely and allocate test effort accordingly.
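Metamorphic testing is useful precisely because AI models rarely have an exact oracle: instead of knowing the right answer, you check a relation between the outputs for related inputs. This sketch assumes a hypothetical risk model whose score should never fall when blood pressure rises, and shows the relation catching an injected bug. Both model functions and their coefficients are made up for the example.

```python
import math


def risk_model(age: float, systolic_bp: float) -> float:
    """Stand-in for the model under test: a fixed logistic risk score."""
    z = 0.04 * (age - 50) + 0.03 * (systolic_bp - 130)
    return 1 / (1 + math.exp(-z))


def buggy_risk_model(age: float, systolic_bp: float) -> float:
    """Same model with an injected bug: abs() breaks monotonicity below 130."""
    z = 0.04 * (age - 50) + 0.03 * abs(systolic_bp - 130)
    return 1 / (1 + math.exp(-z))


def monotonic_bp_violations(model, cases, delta=5.0):
    """Metamorphic relation: raising blood pressure must not lower risk.

    No ground-truth label is needed; only source and follow-up outputs
    are compared.
    """
    return [
        (age, bp) for age, bp in cases
        if model(age, bp + delta) < model(age, bp)
    ]


cases = [(40, 100), (60, 125), (70, 150)]
print("correct model:", monotonic_bp_violations(risk_model, cases))
print("buggy model:  ", monotonic_bp_violations(buggy_risk_model, cases))
```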

E: Building the AI-Enabled Test Framework

- Self-healing test scripts: when UI structure or model API changes, scripts adapt automatically (part of “self-healing automation”).

- Autonomous testing agents: AI agents discover new flows, generate tests, and monitor system behaviour.

- Intelligent heuristics: use computer vision and NLP for UI testing and model-output validation.

- Built-in analytics: combine test results with telemetry and defect data to feed back into test design.

- Scriptless interfaces: let non-technical testers define test scenarios in natural language.
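To illustrate the self-healing idea, here is a deliberately simple, rule-based sketch: a locator tries its primary attribute, falls back to alternatives, and promotes whichever one uniquely matches. Commercial tools generally advertise richer, ML-based matching over many element attributes; the `SelfHealingLocator` class and the dictionary-based "page" here are hypothetical stand-ins for a real DOM driver.

```python
from dataclasses import dataclass, field

# A toy "page": a list of element attribute dicts. A real framework would
# query a live DOM through a driver such as Selenium or Playwright.
Page = list[dict[str, str]]
Locator = tuple[str, str]  # (attribute name, expected value)


@dataclass
class SelfHealingLocator:
    """Try the primary locator, then fallbacks; promote whichever works."""

    primary: Locator
    fallbacks: list[Locator] = field(default_factory=list)
    healed_log: list[str] = field(default_factory=list)

    def find(self, page: Page) -> dict[str, str]:
        for loc in [self.primary, *self.fallbacks]:
            attr, value = loc
            matches = [el for el in page if el.get(attr) == value]
            if len(matches) != 1:  # insist on an unambiguous match
                continue
            if loc != self.primary:
                # "Heal": record the change and make the working locator primary.
                self.healed_log.append(f"healed {self.primary} -> {loc}")
                self.fallbacks.remove(loc)
                self.fallbacks.insert(0, self.primary)
                self.primary = loc
            return matches[0]
        raise LookupError(f"no unique element for {self.primary} or its fallbacks")


login = SelfHealingLocator(
    primary=("id", "login-btn"),
    fallbacks=[("data-test", "login"), ("text", "Log in")],
)
old_page = [{"id": "login-btn", "data-test": "login", "text": "Log in"}]
new_page = [{"id": "auth-submit", "data-test": "login", "text": "Sign in"}]

login.find(old_page)  # primary locator still works
login.find(new_page)  # id was renamed, so the locator heals via data-test
print(login.primary, login.healed_log)
```

Keeping a `healed_log` matters in mission-critical settings: every automatic repair should be reviewable rather than silently absorbed.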

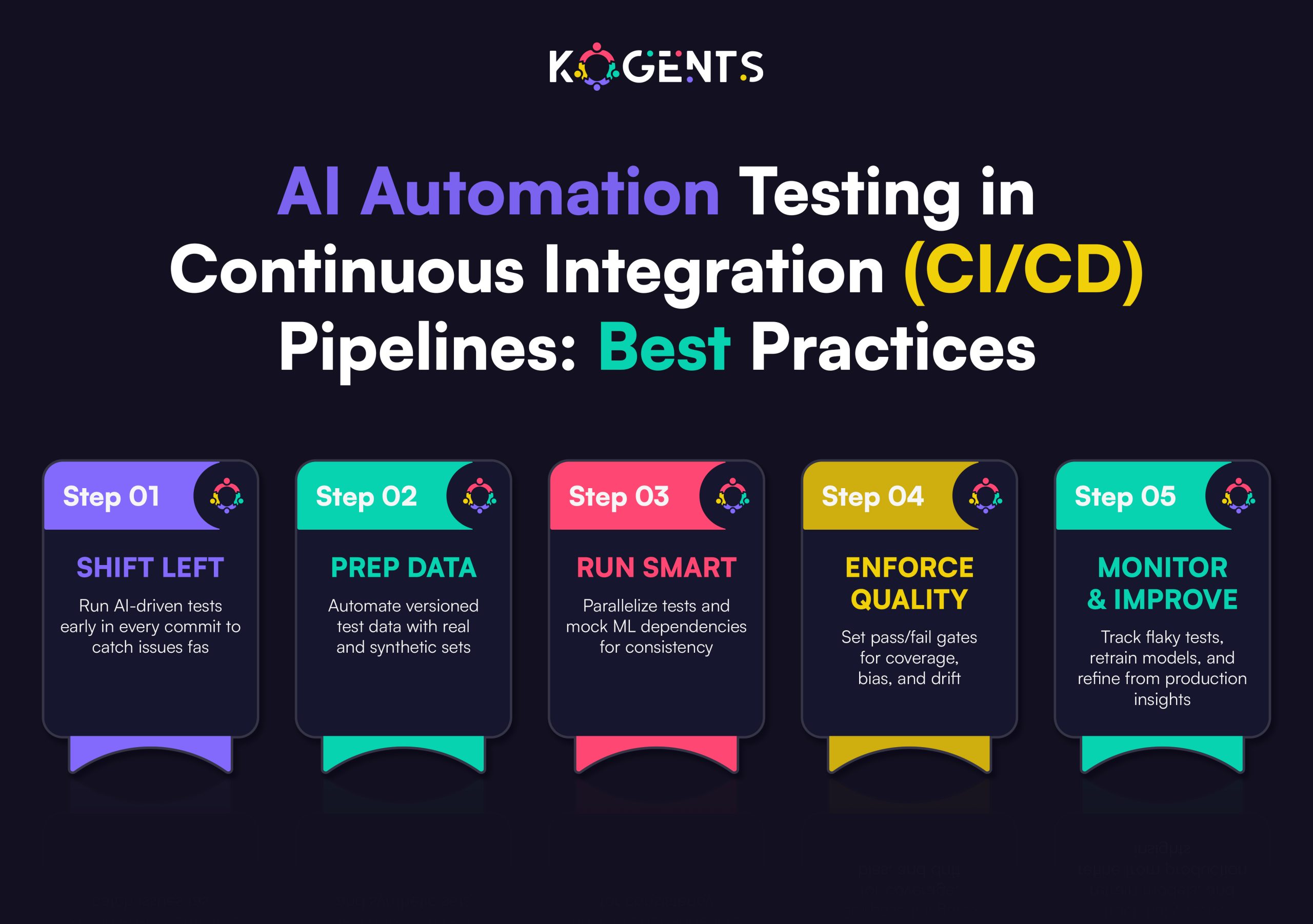

F: Integrating with CI/CD & DevOps QA Pipelines

- Integrate test suite execution into CI/CD pipeline (build → unit tests → AI-enabled tests → staging → production).

- Shift-left testing: test earlier in the lifecycle so issues are detected earlier.

- Monitor metrics: test-cycle time, failure rate, defect escape, and time to fix.

- Implement feedback loops: production telemetry → test‐suite updates → refined test generator.

- Align with DevOps QA culture: cross-team collaboration, automated feedback, quality gate enforcement.
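Quality-gate enforcement can be as simple as a step that compares pipeline metrics with thresholds and fails the build on any violation. The thresholds, metric names, and `evaluate_gate` helper below are assumptions for illustration; the gate fails closed when a metric is missing, which seems a sensible default for mission-critical pipelines.

```python
# Hypothetical gate thresholds; tune them to your own risk profile.
THRESHOLDS = {
    "pass_rate": ("min", 0.98),           # fraction of tests passing
    "defect_escape_rate": ("max", 0.05),  # escaped / total defects
    "p95_latency_ms": ("max", 200.0),     # model API latency budget
}


def evaluate_gate(metrics: dict[str, float]) -> list[str]:
    """Compare pipeline metrics with thresholds; return any violations."""
    violations = []
    for name, (kind, limit) in THRESHOLDS.items():
        if name not in metrics:
            violations.append(f"{name}: metric missing")  # fail closed
            continue
        value = metrics[name]
        if (kind == "min" and value < limit) or (kind == "max" and value > limit):
            violations.append(f"{name}={value} violates {kind} {limit}")
    return violations


def gate_exit_code(metrics: dict[str, float]) -> int:
    """Non-zero exit status makes most CI systems fail the stage."""
    return 1 if evaluate_gate(metrics) else 0


current = {"pass_rate": 0.991, "defect_escape_rate": 0.03, "p95_latency_ms": 240.0}
for violation in evaluate_gate(current):
    print("QUALITY GATE FAILED:", violation)
```

In a CI job, a small wrapper script could call `sys.exit(gate_exit_code(metrics))` with metrics parsed from the test reports.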

G: Self-healing, Adaptive & Predictive Test Automation

- Self-healing automation: Test scripts detect when UI or API changes and adapt without manual intervention.

- Predictive test automation: Using ML/analytics to forecast defect-prone modules or flows and trigger targeted tests.

- Adaptive testing workflows: When the system under test evolves (e.g., a new model version or changed behaviour), the test suite adapts automatically or semi-automatically.

- Leverage anomaly detection in production data to inform new test case generation.
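Predictive test automation can start before a full ML model is in place. The sketch below uses a simple heuristic (a Laplace-smoothed historical defect rate, boosted by recent code churn) as a stand-in for a trained defect-prediction model, then orders tests by the riskiest module they cover. `ModuleHistory`, the churn weight, and the example numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleHistory:
    """Hypothetical per-module history mined from VCS and the bug tracker."""

    past_changes: int  # commits touching the module
    past_defects: int  # of those, commits later linked to a defect fix
    recent_churn: int  # lines changed since the last release


def defect_risk(h: ModuleHistory, churn_weight: float = 0.001) -> float:
    """Heuristic stand-in for a trained defect-prediction model."""
    base_rate = (h.past_defects + 1) / (h.past_changes + 2)  # Laplace-smoothed
    return base_rate * (1 + churn_weight * h.recent_churn)


def prioritise_tests(
    tests: dict[str, set[str]], history: dict[str, ModuleHistory]
) -> list[str]:
    """Order tests by the riskiest module each one covers, highest first."""
    risk = {m: defect_risk(h) for m, h in history.items()}

    def score(name: str) -> float:
        return max((risk.get(m, 0.0) for m in tests[name]), default=0.0)

    return sorted(tests, key=score, reverse=True)


history = {
    "order_router": ModuleHistory(past_changes=40, past_defects=12, recent_churn=800),
    "pricing": ModuleHistory(past_changes=60, past_defects=6, recent_churn=100),
    "reporting": ModuleHistory(past_changes=20, past_defects=1, recent_churn=0),
}
tests = {
    "test_report_export": {"reporting"},
    "test_price_quote": {"pricing"},
    "test_order_routing": {"order_router", "pricing"},
}
print(prioritise_tests(tests, history))
```

Swapping `defect_risk` for a trained classifier later leaves the prioritisation logic unchanged.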

H: Performance, Security, Anomaly Detection & Monitoring in AI Systems

- Performance test automation: generate load on model APIs, simulate high-traffic or extreme conditions.

- Security testing: adversarial inputs to ML models, vulnerability scanning, and fuzzing of AI modules.

- Anomaly detection: monitor production model behaviour for drift, out-of-distribution inputs, and unexplained decisions.

- Reliability & fail-over testing: ensure backup systems, redundant channels, and graceful degradation.

- Compliance: test audit trails, traceability, and explainability (XAI), and ensure QA traceability aligns with regulations.
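For drift monitoring, one widely used statistic is the Population Stability Index (PSI), which compares the binned distribution of a feature in live traffic with a reference sample: PSI = sum over bins of (actual - expected) * ln(actual / expected). A minimal pure-Python sketch follows; the equal-width binning is a simplifying assumption, and the 0.1 and 0.25 cut-offs are a common industry rule of thumb, not a formal standard.

```python
import math
import random


def psi(reference: list[float], live: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference and a live sample.

    Bins are equal-width over the reference range; live values outside it
    are clipped into the first/last bin so out-of-range inputs still count.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps avoids log(0) for empty bins
        return [max(c / len(sample), eps) for c in counts]

    expected, actual = proportions(reference), proportions(live)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_level(score: float) -> str:
    # Rule-of-thumb bands; calibrate them on your own historical data.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"


rng = random.Random(42)
reference = [rng.gauss(0.0, 1.0) for _ in range(5000)]
stable = [rng.gauss(0.0, 1.0) for _ in range(5000)]
shifted = [rng.gauss(0.8, 1.0) for _ in range(5000)]  # simulated mean shift
for label, live in (("stable", stable), ("shifted", shifted)):
    score = psi(reference, live)
    print(f"{label}: PSI={score:.3f} -> {drift_level(score)}")
```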

I: Validation, Verification, Metric Tracking & ROI of Test Automation

- Defect escape rate

- Test-cycle time reduction

- Self-healing rate

- Test-coverage improvement

- ROI of test automation: cost saved, defects found earlier, production defects avoided

- Test-suite reliability

- Mean time to detect an anomaly in production models

- Percentage of automated vs manual tests
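Most of these metrics are simple ratios once the underlying counts are collected consistently. The sketch below computes four of them from hypothetical counts for one reporting period; the `QAPeriod` fields, the numbers, and the ROI formula (benefit minus cost, divided by cost) are illustrative assumptions, and ROI in particular is only as trustworthy as the savings estimate behind it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QAPeriod:
    """Hypothetical counts collected for one reporting period."""

    defects_pre_release: int
    defects_in_production: int
    locator_breakages: int
    auto_healed: int
    automation_cost: float
    estimated_savings: float
    automated_tests: int
    manual_tests: int


def ratio(numerator: float, denominator: float) -> float:
    """Safe division: report 0.0 when there is nothing to measure."""
    return numerator / denominator if denominator else 0.0


def defect_escape_rate(p: QAPeriod) -> float:
    # Share of all defects that were first found in production.
    total = p.defects_pre_release + p.defects_in_production
    return ratio(p.defects_in_production, total)


def self_healing_rate(p: QAPeriod) -> float:
    # Share of locator/API breakages repaired without human intervention.
    return ratio(p.auto_healed, p.locator_breakages)


def automation_roi(p: QAPeriod) -> float:
    # (benefit - cost) / cost; only as good as the savings estimate.
    return ratio(p.estimated_savings - p.automation_cost, p.automation_cost)


def automation_share(p: QAPeriod) -> float:
    # Automated tests as a fraction of the whole suite.
    return ratio(p.automated_tests, p.automated_tests + p.manual_tests)


q3 = QAPeriod(92, 8, 45, 36, 120_000.0, 300_000.0, 850, 150)
print(f"escape rate {defect_escape_rate(q3):.0%}, "
      f"self-healing {self_healing_rate(q3):.0%}, "
      f"ROI {automation_roi(q3):.0%}, automated {automation_share(q3):.0%}")
```

With these sample counts, the escape rate is 8 / 100 = 8%, the self-healing rate is 36 / 45 = 80%, and ROI is (300,000 - 120,000) / 120,000 = 150%.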

J: Maintenance, Scale-Up, Governance, and Continuous Improvement

- Maintenance of test scripts, frameworks, and tool integrations. Even intelligent frameworks need upkeep.

- Scale-up: As AI system complexity grows, test automation needs to scale accordingly via modularity, cloud execution, and parallelisation.

- Governance: Version control, audit logs, test plan approvals, compliance checklists.

- Continuous improvement: Use telemetry and analytics to refine test-case generation, adapt to drift, retire obsolete tests, and refine metrics.

- Ethical considerations: bias testing, fairness, and explainability are especially important in AI systems.

- Documentation & certification: Align with QA standards, ensure auditability.
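For the bias-testing part of ethical review, one common starting point is demographic parity difference: the largest gap in positive-prediction rates between groups. The sketch below is illustrative only; the data, group labels, and 0.1 tolerance are assumptions, and the appropriate fairness metric and threshold depend on the domain and applicable regulation.

```python
from collections import defaultdict


def positive_rate_by_group(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must be the same length")
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Illustrative data only: model approvals (1) / denials (0) for two groups.
preds = [1, 0, 1, 1, 0, 1, 0, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5
gap = demographic_parity_difference(preds, groups)
TOLERANCE = 0.1  # assumed policy threshold, set per domain and regulation
print(f"parity gap {gap:.2f}:", "FLAG for review" if gap > TOLERANCE else "ok")
```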

Case Study Spotlight

Case Study: Financial-Trading AI Platform

A global investment bank deployed a machine-learning-based trading algorithm that makes real-time decisions across multiple asset classes.

The system qualified as mission-critical due to financial exposure, regulatory oversight, and 24/7 live operations.

Implementation Highlights

- Introduced synthetic test data to simulate real and extreme market conditions, including high-volatility and “flash crash” scenarios.

- Deployed a predictive defect analytics module to prioritise test cases using historical defect data, focusing on high-risk trading modules.

- Integrated an AI-enabled, self-healing automation framework within the CI/CD pipeline to enable continuous testing, anomaly detection, and adaptive test flow execution.

Outcomes

- Achieved a 40% reduction in defect escape rate, a 6× faster test cycle (24 h → 4 h), and a 30% improvement in decision-path coverage within six months.

- Self-healing automation cut flaky-test failures by 25%, while early model-drift detection prevented potential trading losses.

Summary of Implementation Methodology

| Phase | Key Activities | Key Metrics | Typical Challenges |
|---|---|---|---|
| Strategy & Governance | Define goals, risk profile, and KPIs | Defect escape rate, ROI, ISO audit metrics | Lack of stakeholder alignment |
| Architecture & Tool Selection | Choose stack, AI-test tools, CI/CD integration | Tool adoption rate, automation coverage | Tool fragmentation, integrations |
| Test-Data Preparation | Synthetic data, data-drift modelling | Data-coverage %, adversarial case count | Data privacy, edge-case completeness |
| Test-Case Generation & Coverage | ML-based test generation, prioritisation | Test-suite size, coverage, defect density | Test-generation reliability, maintainability |
| AI-Enabled Test Framework | Self-healing scripts, autonomous agents | Self-healing rate, script failure rate | Complexity, team learning curve |
| CI/CD & DevOps QA Integration | Integrate into pipeline, shift-left | Build-to-test cycle time, feedback loop latency | Pipeline bottlenecks, flaky tests |
| Self-healing / Adaptive / Predictive Automation | Adapt to changes, anticipate defects | Prediction accuracy, adaptive coverage | Model drift, false positives |
| Performance/Security/Anomaly Testing | Load, adversarial, anomaly detection | Response times, security incidents, drift alarms | Complexity, tool overlap |
| Validation & ROI Tracking | Measure value, QA traceability | ROI %, mean time to defect detection | Data collection, accurate measurement |
| Maintenance & Scale-Up | Governance, scaling, continuous improvement | Test-suite growth, maintenance cost, compliance audit pass-rate | Technical debt, framework rot |

Conclusion

Implementing AI automation testing for mission-critical AI systems is not a luxury; it’s a necessity.

By following the methodology outlined here (strategy, architecture, synthetic data, intelligent frameworks, self-healing, CI/CD integration, non-functional testing, metrics, governance, and maintenance), you can move from brittle test suites to a robust AI-enabled test framework.

At Kogents.ai, we empower organisations to transcend traditional QA, leveraging intelligent test automation, predictive test analytics, and autonomous testing agents. So, get in touch now with the best agentic AI company in town!

FAQs

What is AI automation testing?

AI automation testing is the practice of using artificial intelligence in test automation to design, generate, prioritise, execute, and maintain test cases in a more intelligent, adaptive, and autonomous way. It extends beyond traditional scripted automation by enabling AI-driven test automation, self-healing automation, predictive test automation, and adaptive testing workflows.

What are the benefits of using AI-based QA automation in mission-critical systems?

Benefits include greater reliability with fewer defects, faster test cycles through continuous testing, and broader coverage of complex AI features. Self-healing automation cuts maintenance costs while boosting ROI. It also enables proactive detection of anomalies, model drift, and security issues.

Which tools are available for AI test automation in 2025?

Examples include:

- mabl: focuses on intelligent functional test automation backed by ML.

- Tricentis: offers AI-enabled automation for enterprise testing and risk-based testing.

- SmartBear: provides vision-based testing and automation with AI capabilities.

- Applitools: specialises in visual-AI testing and anomaly detection in UI/UX.

- Testim: emphasises self-healing test automation scripts.

What are the challenges of deploying AI-based automated testing?

Key challenges include data quality, model drift, immature tools, and high maintenance demands. Teams also face skill gaps, compliance pressures, and difficulty measuring ROI without solid baselines.

How do you test for model drift, adversarial inputs, and anomalies in AI systems?

Use synthetic and adversarial test data to simulate edge cases, detect anomalies, and track model drift. Automate fuzzing, high-risk tests, and anomaly monitoring to maintain AI reliability and security.

Kogents AI builds intelligent agents for healthcare, education, and enterprises, delivering secure, scalable solutions that streamline workflows and boost efficiency.